Today I finished a pack of 100 coffee filters; that wouldn’t normally be much of a milestone, but this is the first pack of filters I’ve finished since moving to the US. After three months in San Francisco we’re feeling quite settled now, but through the stresses of moving, sorting out paperwork, and learning to live in a new city and country, making my morning coffee has been a reassuringly routine part of each day.

Australia and the US are similar in a lot of ways, of course, but we’ve still had to do a surprising amount of adjustment, much more than I expected. Of those adjustments, though, I think apartment living has probably been the easiest. We worried a little about moving into a high-rise apartment building, especially given that we had to sign a lease before seeing our apartment, but it’s turned out very well; the building is great, and our apartment is quiet and comfortable, and seems to have plenty of room despite being smaller than our old house. Our two cats moved with us, and they seem to love it too!

Living without a car has also been surprisingly easy. Between Amazon and Google Shopping Express there’s not a lot we can’t get delivered, and San Francisco’s public transport has been pretty good, even if most of it is by bus. We’re also right near where the cable cars meet, and while the Powell-Hyde and Powell-Mason lines are usually too busy to catch, we can (and do!) often catch the California cable car, which doesn’t get old. For the times we’ve needed a car around town, we’ve used UberX, which is super-convenient and (generally) cheaper than a taxi.

On the other hand, shopping has at times been a baffling ordeal; the common refrain in our house is “everything is slightly different and I don’t like it!”. It seems trivial, but having to find a new favourite brand of everything all at once really is a hassle. Even basic produce is a problem: good lamb has been hard to find, and this weekend we struggled to find a pork roast that still had its skin on (curse you, health nuts!). We’ve also had zero luck finding good Greek food, and little more success finding a good banh mi like we’d have back in Melbourne.

It’s not all bad, though! Good Mexican food (and food from countless other Latin American countries, too) is cheap and plentiful, and it’s impossible to walk more than 5 metres without tripping over great bread, ice cream, or craft beers. Whole Foods is a great place to shop even if it does indulge in a lot of pseudoscientific crap, and Trader Joe’s is strangely awesome (especially the cookie butter!). This really is a great town to eat and drink in, both at home and out-and-about; we’ve just had to accept that food is different here, and learn how to make the most of that.

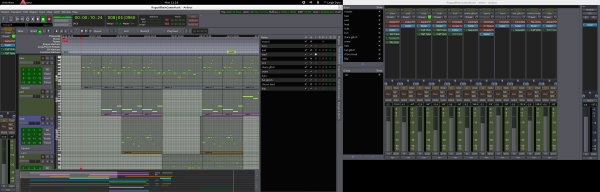

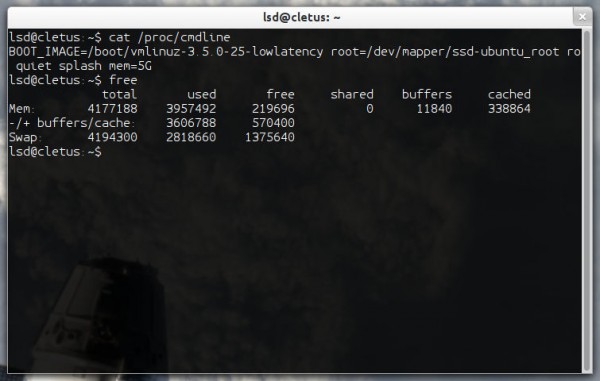

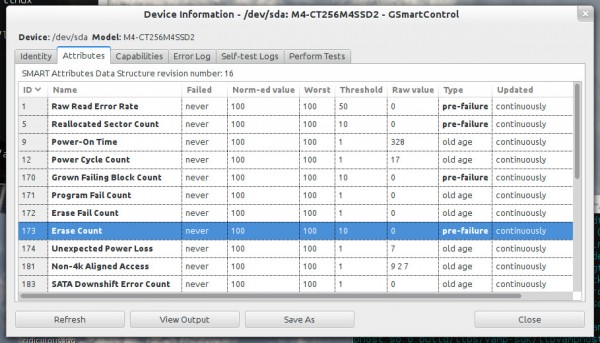

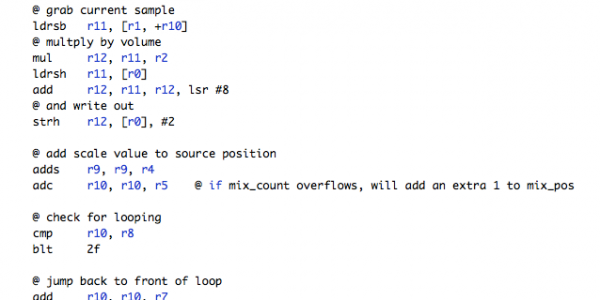

Now that we’re settling in, I have time to get back to my hobbies (beyond exploring our new city, of course!); I haven’t done anything musical, but I’m starting Japanese classes again shortly. I’ve also built a new HTPC with a decent CPU and video card so it can do double-duty as a gaming system, running Steam in Big Picture mode and playing Wii games under Dolphin.

Well, that’s one pack of coffee filters down, but I’m sure there’ll be many more to come!